One of the most popular features of SQL 4 CDS has proven to be the ability to change data using INSERT, UPDATE and DELETE statements. To that end, I’ve been looking at how to improve the performance of these types of queries. Whilst I’ve been doing this with a view to improving SQL 4 CDS, the same techniques should also apply to other SDK applications you might create. (Not plugins I’m afraid – more details on that later)

Say we’ve got a list of 5,000 records we need to update – what’s the best way of updating them?

Simple approach: Single updates

The simplest code we could write is something like:

foreach (var entity in toUpdate)

{

svc.Update(entity);

}

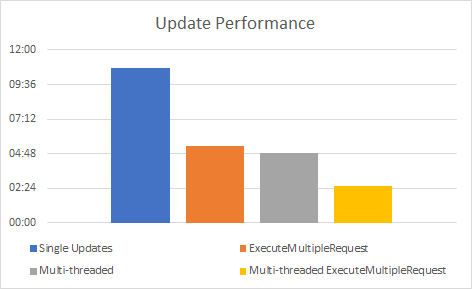

This works just fine, but isn’t the quickest. In my tests, running this in the UK against a UK (crm11) instance, this took over 10 minutes to complete. It feels like we can do better!

ExecuteMultipleRequest

At least some of the time taken for each request is waiting for the request to travel from my PC to the server and for the response to come back. In my case the network round-trip time to the server is about 20ms. If I could get rid of this for 5,000 requests that would save me 1:40.

I can do this by batching my requests with an ExecuteMultipleRequest:

var emr = new ExecuteMultipleRequest

{

Requests = new OrganizationRequestCollection(),

Settings = new ExecuteMultipleSettings

{

ContinueOnError = false,

ReturnResponses = false

}

};

const maxRequestsPerBatch = 100;

foreach (var entity in toUpdate)

{

emr.Requests.Add(new UpdateRequest { Target = entity });

if (emr.Requests.Count == maxRequestsPerBatch)

{

svc.Execute(emr);

emr.Requests.Clear();

}

}

if (emr.Requests.Count > 0)

{

svc.Execute(emr);

}

This breaks the requests up into batches of 100 and sends each batch in one go to the server. It adds a bit of complexity to the code, but actually gets an even bigger speed-up than from the network time alone – my 5,000 requests are now executed in half the time at 5:19!

Multi-threading

Both the examples so far have run all the requests one at a time, even though the second example sent them in batches. What if we try to run multiple requests concurrently? There’s more complexity again here, but there’s a good sample available on Microsoft Docs which shows how to use the Task Parallel Library and CrmServiceClient to do this safely.

Applying the changes to my code from this sample gets me a slightly better speed improvement compared to ExecuteMultipleRequest – my 5,000 records get updated in 4:52.

ℹ Important

If you try this approach, take note of the “Optimize Connection settings” section of the sample. Without these setting changes you won’t get the expected performance improvement from the rest of the parallel code.

You’re much more likely to run into the service protection limits when using multi-threading. Using the CrmServiceClient takes away a lot of the complexity of working with these. If you’re not using CrmServiceClient, be sure to take note of the errors that could be produced when you hit these limits and retry accordingly.

Combining Approaches

What if I combine these two and use multi-threading with each thread building and executing ExecuteMultipleRequests?

To do this, in the Parallel.ForEach setup you’ll need to create a thread-local ExecuteMultipleRequest as well as a clone of the CrmServiceClient:

Parallel.ForEach(toUpdate,

new ParallelOptions { MaxDegreeOfParallelism = 10 },

() => new

{

Service = svc.Clone(),

EMR = new ExecuteMultipleRequest

{

Requests = new OrganizationRequestCollection(),

Settings = new ExecuteMultipleSettings

{

ContinueOnError = false,

ReturnResponses = false

}

}

},

(entity, loopState, index, threadLocalState) =>

{

threadLocalState.EMR.Requests.Add(new UpdateRequest { Target = entity });

if (threadLocalState.EMR.Requests.Count == maxRequestsPerBatch)

{

threadLocalState.Service.Execute(threadLocalState.EMR);

threadLocalState.EMR.Requests.Clear();

}

return threadLocalState;

},

(threadLocalState) =>

{

if (threadLocalState.EMR.Requests.Count > 0)

{

threadLocalState.Service.Execute(threadLocalState.EMR);

}

threadLocalState.Service.Dispose();

});

And the result? It’s obviously much more complex code than we started with, but if you’re updating a serious number of records it seems well worth doing. The original 10+ minutes to update 5,000 records has now become a much better 2:34 – about 4x faster!

Summary

There’s some good performance gains to be had with some relatively minor changes to your code. Even better, most of what you need is already in the sample code online!

⚠ Warning

This is for external SDK apps. Don’t try this in plugins! ExecuteMultipleRequest and parallel execution are both explicitly listed as things not to do, and will cause your plugin to fail the solution checker as well as potentially causing errors at runtime.

Testing!

Your mileage will vary. Anything from the number of attributes you’re updating, network latency, plugins, what other users are doing etc. can have a big impact. Some of these might be mitigated more by one approach than another. The only way to know what works best for your scenario is to test it yourself! You can also try tweaking the batch sizes and degree of parallelism to see where the sweet spot is for you.

I really wish I had found this blog earlier.

Great post …

hello,

is the same applicable to Create as well, I can see you have shown the example for update, so what about when I create a new record with 10fields along(3 are lookups). Same improvement reflect here too?

Yes, I’d expect similar performance gains for Create and Delete as well as Update. If you need to capture the unique ID returned by the Create message you’ll need to be careful how you do that in a parallel loop, making sure you add them to a thread safe collection or using locking to avoid any errors.

This looks great, but does not seem to compile: see screenshot. I made one or two small changes but with or without those changes, it does not compile.

https://imgur.com/a/dJtfikT

Error CS1643 Not all code paths return a value in lambda expression of type ‘Func<Entity, ParallelLoopState, long, , >’ …

Thanks, I’ve updated the sample code now.

Hi Mark, thank you for this post, can this scenario works for Web API (rest) batch request ?, tried but seems to be inconsistence(random) when reading back the responses ( ..”ReadAsMultipartAsync..It does not have a content type header starting with ‘multipart/’ “…)?, whit out the parallel, is working fine. Thank you .

I haven’t tried this, but my guess is there’s something overlapping between two of the parallel threads. Are you sure each thread is using its own isolated request and response? Also, is there a reason you’re creating your own Web API requests rather than using the SDK?

Thank you Mark, I’ll check on the isolation ; there is no reason, it is a project(data feed) that I inherit in the company and it has been working fine and good for the last 3 years, just thinking into pump it up a little, but yes I’m planning to move everything to the new SDK now with all the new net core versions.

Hi Mark, I have tried the execute multiple method before in my program. I am wondering how do you know if all of the entities or the batch of that entity has been updated/created? I need this to track the progress. In my experience, when I used this method, I can’t track the progress.

I am aware that this method is faster. Even though, I need to be able to track the progress for information.

Ex.

The program is already executed and completed, yet the updating/creating process is still running whenever I refresh the entity records in D365.

Thank you in advance.

No, as far as I’m aware there is no way to get progress reporting while this method is executing. You could look for the expected effects of your requests taking place (e.g. if you’re doing updates, look for the

modifiedondate being changes on the records) but this would add extra load to your system.Great post . it works but I have noticed it never gets to the first loop where the request is is maxRequestsPerBatch. it fires before the threshold. I just added some code to monitor the progress

private static void UpdateinparallelForEach( IEnumerable entities)

{

Console.WriteLine(“Total Records to be Processed ” + entities.Count());

int progress = 0;

var paralleloptions = new ParallelOptions() { MaxDegreeOfParallelism = 3 };

CrmServiceClient crm365Service = null;

CrmServiceClient.MaxConnectionTimeout = new TimeSpan(5, 0, 0); //5 hours

crm365Service = new CrmServiceClient($@”AuthType=ClientSecret;url={serviceURL};ClientId={clientID};ClientSecret={secret}”);

Parallel.ForEach(entities,

paralleloptions,

() => new

{

Service = crm365Service.Clone(),

DMR = new ExecuteMultipleRequest

{

Requests = new OrganizationRequestCollection(),

Settings = new ExecuteMultipleSettings

{

ContinueOnError = false,

ReturnResponses = false

}

}

},

(entity, loopState, index, threadLocalState) =>

{

string firstname = GetRandomFirstName();

Entity MyEntity = new Entity(“cdcep_donationlog”);

MyEntity.Id = entity.Id;

MyEntity[“name”] = “hello”; //logic to get the updated fields

UpdateRequest updateRequest = new UpdateRequest();

updateRequest.Target = MyEntity;

threadLocalState.DMR.Requests.Add(updateRequest);

if (threadLocalState.DMR.Requests.Count >= 500)

{

//never gets here

threadLocalState.Service.Execute(threadLocalState.DMR);

threadLocalState.DMR.Requests.Clear();

Console.WriteLine(“Thread id executing the batch loop 1 is : ” + System.Threading.Thread.CurrentThread.ManagedThreadId);

Interlocked.Add(ref progress,500);

Console.WriteLine(progress);

}

return threadLocalState;

},

(threadLocalState) =>

{

if (threadLocalState.DMR.Requests.Count > 0 )

{

threadLocalState.Service.Execute(threadLocalState.DMR);

Console.WriteLine(“Thread id executing the batch loop 2 is : ” + System.Threading.Thread.CurrentThread.ManagedThreadId);

Interlocked.Add(ref progress, threadLocalState.DMR.Requests.Count);

Console.WriteLine(progress);

}

threadLocalState.Service.Dispose();

});

}

Interesting – presumably Parallel.ForEach is deciding that the thread should be finished before it gets to 500 requests per thread. You can see how many it does process in each thread with your logging in the finalizer callback.

Hi Mark Have you considered using the parallel.foreachasync and making use of the async capability of service client when updating and creating records. ?

No, I haven’t tried this yet. Please report back if you’ve tried it and got some results to share!

Hi Mark,

in terms of using the parallelization, how critical is it to have a localInit body which clones the serviceClient as a localThreadSvc.

Is this critical in achieving true parallelization? I ask this because, the parallel.foreachasync does not currently support localInit body OOTB…

Although untested, I suspect that we can get away with this by locally cloning in the execution body in a using statement.

await Parallel.ForEachAsync(myList, new ParallelOptions() { MaxDegreeOfParallelism = crmService.RecommendedDegreesOfParallelism },

async (listobj, _) =>

{

using (var svcClone = crmService.Clone())

{

await svcClone .ExecuteAsync(updateRequest);

}

});

thoughts?

That should work, but it won’t give you the option to batch requests within each thread as well. It’ll also give you more overhead of having to run

.Clone()on each request rather than only once per thread.Is the “Multi-threading” can it be done with the organization service? And not use the “CrmServiceClient” because i want to use this in an action

No, you can’t use the IOrganizationService in an action multi-threaded – see https://learn.microsoft.com/en-us/power-apps/developer/data-platform/best-practices/business-logic/do-not-use-parallel-execution-in-plug-ins

The number of batch size of multiplerequest make me confusing.

I did a test by change the size from 10 to 100, it turned out that the performance is best around 30. The test is with different environment with different MaxDegreeOfParallelism(from 5 to 120), in my assumption, the server with large MaxDegreeOfParallelism number should work better with more concurrent requests, but it’s not, with less batch size and more concurrent request, the performance is dropping quickly.

Great post! Was exactly what I needed.

On the topic of the MaxDegreeOfParalellism parameter, I found that the CrmServiceClient object has a RecommendedDegreesOfParallelism attribute that is supposed to provide the optimal number of concurrent threads for optimal processing. As I have been testing this parameter, I’ve been getting values ranging between 40-50. I’ve always been worried about using fixed values for things like max number of threads unless you go through extensive testing to determine the optimal value and does that optimal value change through out the day or over extended periods of time. At least with this parameter, it’s Dataverse saying that this is the optimal number of threads to use at this time. Here are some links to more detailed information.

https://learn.microsoft.com/en-us/dotnet/api/microsoft.xrm.tooling.connector.crmserviceclient.recommendeddegreesofparallelism?view=dataverse-sdk-latest

https://learn.microsoft.com/en-us/power-apps/developer/data-platform/send-parallel-requests?tabs=sdk

Hi Mark,

This post has been incredibly helpful, Thank you!!

I’ve written a console app to revoke access to around 60k contacts. Initially using the ExecuteMultiple request resulted in 5k contacts being processed in 26 minutes, pretty slow.

I decided to use your multi-threading example. Initially only 2.5k-3k records were being processed within 5 minutes before running into the following error. The request channel timed out attempting to send after 00:02:00.

After reviewing the connection optimization settings and adding them to the code, the performance improvement was fantastic. Now 5k records are processed in 2-3 minutes!

For anyone else doing this, i found the following examples useful.

https://learn.microsoft.com/en-us/power-apps/developer/data-platform/send-parallel-requests?tabs=sdk#optimize-your-connection

https://learn.microsoft.com/en-us/power-apps/developer/data-platform/send-parallel-requests?tabs=sdk#using-crmserviceclient-with-net-framework