A few months ago I investigated how using multi threading and batching requests can be used to improve the performance of Dataverse integrations. In the latest release of the Dataverse tooling we’ve now got another option to use – EnableAffinityCookie. Let’s have a look at how that can help too.

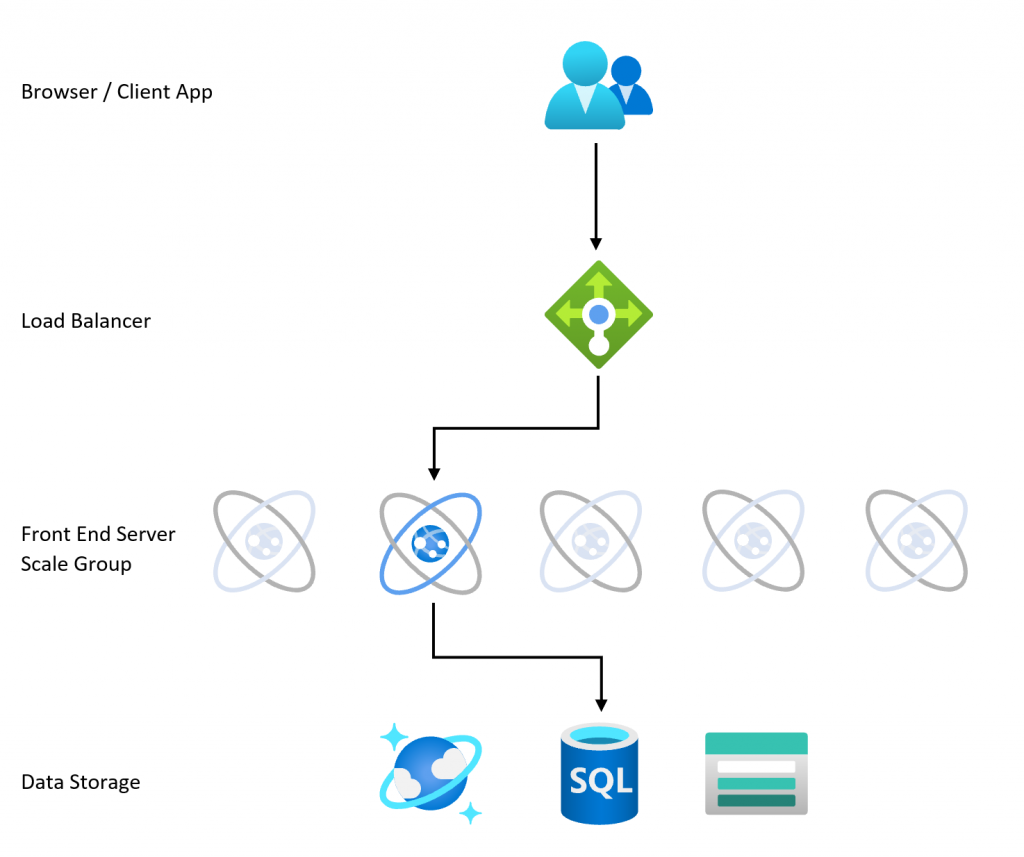

Any Dataverse instance is hosted across a lot of different servers, which you might see referred to as a scale group. In front of that there is a load balancer which directs each request to one of those servers.

When you’re using Dataverse as a regular user, you want each of your requests to go to the same server. This lets the server make best use of caching to improve performance from one request to the next.

In bulk data load scenarios however, this leads to a bottleneck. There are all these servers available, but we’re sending thousands of requests to only one of them and all the rest are sitting there idle. To stop any individual server getting overwhelmed there are service protection limits in place which throttle the number of requests that can be processed per server.

The load balancer ensures that each request goes to the same server by using a cookie. This “affinity cookie” is set on the first request to identify the server that was used, and the client automatically sends it on all subsequent requests to ensure they get routed to the same server.

Disabling the affinity cookie

In previous releases of the Dataverse tooling we could only disable the use of the affinity cookie globally in the application config file:

<appSettings> <add key="PreferConnectionAffinity" value="false" /> </appSettings>

This doesn’t fit well with tools that sometimes benefit from the affinity cookie and sometimes want to disable it. In the latest release though (9.1.0.79) we now have a new EnableAffinityCookie property available to enable or disable it dynamically:

svc.EnableAffinityCookie = false;

Performance impact

So what impact does turning the affinity cookie off really have?

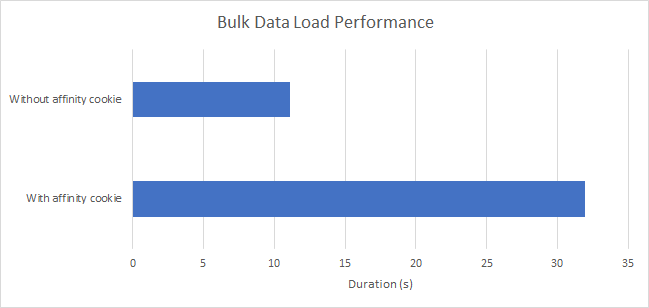

In my test I am loading 1,000 records. Following my own advice from my previous post I am splitting this into batches of 10 records and running up to 10 threads in parallel.

As you can see, this has a massive impact! My data load now goes through in roughly 1/3 of the time. The only change I had to make was inserting line 7 in my sample code below:

Parallel.ForEach(

Enumerable.Range(1, 1000).Select(i => new Entity("account") { ["name"] = $"Account {i}" }),

new ParallelOptions { MaxDegreeOfParallelism = 10 },

() =>

{

var org = _org.Clone();

org.EnableAffinityCookie = false;

return new

{

Service = org,

EMR = new ExecuteMultipleRequest

{

Requests = new OrganizationRequestCollection(),

Settings = new ExecuteMultipleSettings

{

ContinueOnError = false,

ReturnResponses = false

}

}

};

},

(entity, loopState, index, threadLocalState) =>

{

threadLocalState.EMR.Requests.Add(new CreateRequest { Target = entity });

if (threadLocalState.EMR.Requests.Count == maxRequestsPerBatch)

{

threadLocalState.Service.Execute(threadLocalState.EMR);

threadLocalState.EMR.Requests.Clear();

}

return threadLocalState;

},

(threadLocalState) =>

{

if (threadLocalState.EMR.Requests.Count > 0)

{

threadLocalState.Service.Execute(threadLocalState.EMR);

}

threadLocalState.Service.Dispose();

}

);

As always your situation may be different to my test case so please try it on your workload to get accurate results for you. In particular each environment can have a different number of servers available depending on whether it is a trial and how many licenses you have.

When not to use it

As with any tool, it’s as important to understand when not to use it as when to use it.

Thankfully Matt Barbour provided a great write-up of this on GitHub. In summary, disabling the affinity cookie is not appropriate when:

- making metadata changes

- issuing only a small number of requests

To make it worthwhile disabling the affinity cookie you must be making a substantial number of create/update/delete requests in parallel, otherwise you may actually see the performance decrease.

Hi Mark, thank you for the great post, do you think adding a lock(execute response) in the the threads to collect responses could be too expensive or bad idea in this scenario ?

You can now use serviceClient.RecommendedDegreesOfParallelism to control the number of threads in your parallel foreach loop, enabling you to automatically scale to all available server-side resources. See the docs an example: https://learn.microsoft.com/en-us/power-apps/developer/data-platform/send-parallel-requests